People tend to think of machine learning as some kind of sorcery, especially when it produces an awesome result "out of nothing". The truth is that it's not magic but technology, and the work behind the scenes is nowhere near as easy as a flick of Hermione's wand.

When technology is driven by engineering and science, the magic wand does not always look entirely "magic" (in our case - smart and universal) to the demanding eye.

How are images usually upscaled?

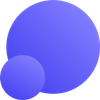

Image upscaling algorithms solve the task of filling in the pixels that are missing when an image is enlarged. The most common one is bicubic interpolation.

This method doesn't draw any details. It just computes new pixels from the values of the surrounding ones using a formula. It is fast enough and looks more or less appealing to the human eye compared with the big chunky "pixels" created by the more straightforward nearest neighbor or bilinear interpolations.

Images upscaled this way do not contain any "new" detail and appear blurry, because the formula tries to find a tradeoff between pixelization artifacts and smoothing. There are several different bicubic kernels that may give different results for different kinds of images, but in general they behave the same.

Here is an example: https://blog.codinghorror.com/the-myth-of-infinite-detail-bilinear-vs-bicubic/
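To make "using a formula" concrete, here is a minimal Python sketch of one common bicubic kernel (the Keys cubic convolution kernel with a = -0.5) applied to a single row of pixels. The function names and the border handling (edge replication) are illustrative assumptions, not the code of any particular library. A real bicubic resize applies the same idea along rows and then along columns.

```python
import math


def cubic_kernel(x, a=-0.5):
    """Keys cubic convolution kernel; a = -0.5 is one common choice."""
    x = abs(x)
    if x <= 1:
        return (a + 2) * x**3 - (a + 3) * x**2 + 1
    if x < 2:
        return a * x**3 - 5 * a * x**2 + 8 * a * x - 4 * a
    return 0.0


def upscale_row(row, factor):
    """Upscale a 1D row of pixel values by an integer factor."""
    n = len(row)
    out = []
    for i in range(n * factor):
        # Position of output sample i in input coordinates (pixel centers aligned).
        src = (i + 0.5) / factor - 0.5
        base = math.floor(src)
        value = 0.0
        # Blend the four nearest input samples with kernel weights.
        for k in range(base - 1, base + 3):
            sample = row[min(max(k, 0), n - 1)]  # replicate edge pixels at borders
            value += sample * cubic_kernel(src - k)
        out.append(value)
    return out


# A sharp step edge becomes a smooth ramp of blended values.
print(upscale_row([0, 0, 100, 100], 2))
```

Every output value is a weighted sum of at most four neighboring input values, which is why no genuinely new detail can appear.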

There are more advanced algorithmic methods, e.g. the widely used Lanczos interpolation or even more complicated fractal interpolation methods. They try to detect and preserve edges and borders in the image, but all of them lack generality. Their focus is on finding a universal mathematical formula that copes with different content and textures, not on adding meaningful detail that would make the upscaled image look realistic.
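For comparison, the Lanczos kernel is a windowed sinc function. The sketch below assumes the common choice of a = 3 lobes; the function name is illustrative.

```python
import math


def lanczos_kernel(x, a=3):
    """Lanczos kernel: sinc(x) * sinc(x / a) for |x| < a, else 0."""
    if x == 0:
        return 1.0
    if abs(x) >= a:
        return 0.0
    px = math.pi * x
    # sinc(x) * sinc(x / a) with the normalized sinc, written in closed form.
    return a * math.sin(px) * math.sin(px / a) / (px * px)


# Weights for a sample located 0.5, 1.5 and 2.5 pixels away.
print([lanczos_kernel(d) for d in (0.5, 1.5, 2.5)])
```

The kernel is sharper than the bicubic one, but it is still a fixed formula applied identically everywhere in the image, whatever the content is.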

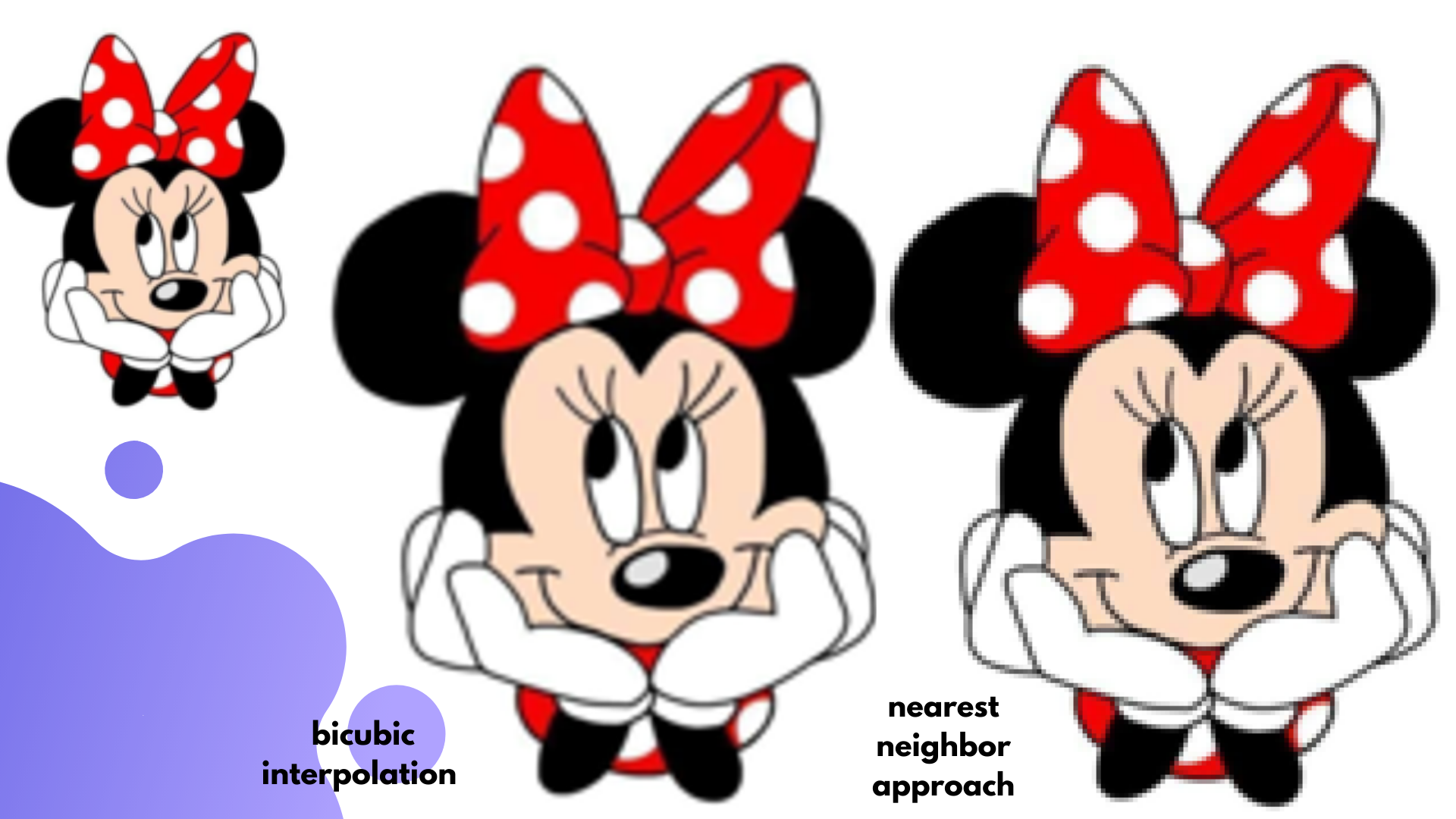

Moreover, bicubic and other algorithmic interpolations do not help with image compression artifacts like JPEG blocks. Even worse, they enlarge them and make them more visible.

The problem of high-quality image upscaling cannot be solved without leading-edge technologies, among which are neural networks. Having significant "memorization" and generalization ability, a neural network is trained on hundreds of thousands of different real-world images in both high-resolution and downscaled form. It brings an understanding of the underlying textures and objects to the image upscaling process. A neural network can "hallucinate" completely new pixels in the enlarged image based on examples of similar objects learned during training. Images enriched with new detail thus look more realistic and plausible.

However, that alone does not make our "magic wand" truly magic. There's a difference between "academic" and "real" reactors.

Check it for more information.

"Academic" neural networks are trained on clean downscaled examples of high-resolution images. These examples are usually obtained by bicubic downsampling, which is not really a good idea: the neural network quickly guesses the dependency between the provided inputs and outputs.

For the folks who like science: the network starts to solve an easier task by searching for the solution on the manifold created by the bicubic function.

"Real" images on the web also suffer from the several different compression algorithms applied to them, camera noise, motion blur, etc. Academic studies usually do not consider image compression, especially a combination of multiple compression algorithms with different settings - the most common case around the web. Publicly available research and open-source models usually work excellently on nice clean PNG images from well-known datasets, but they fail miserably on dirty real-world inputs corrupted by compression algorithms, camera noise, and low light during shooting.

Compression algorithms corrupt images by reducing the information they contain in order to shrink the file size. This makes the task for a "real" algorithm even more difficult.

Therefore, neural networks should:

- understand context to remove (unknown) compression artifacts, camera noise, and other real-world corruptions from the image

- restore missing detail in compressed and corrupted images with reduced information contained in the input
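The gap between "academic" and "real" training inputs can be sketched in a few lines of Python. Everything here is a hypothetical illustration: the function names are made up, box averaging stands in for bicubic downsampling, and noise plus coarse quantization is only a crude stand-in for camera noise and lossy compression (real JPEG works on DCT blocks and is far more complex).

```python
import random


def downscale(img, factor):
    """Box-average downscale of a 2D grayscale image (stand-in for bicubic)."""
    h, w = len(img) // factor, len(img[0]) // factor
    return [
        [
            sum(img[y * factor + dy][x * factor + dx]
                for dy in range(factor) for dx in range(factor)) / factor**2
            for x in range(w)
        ]
        for y in range(h)
    ]


def degrade(img, noise_sigma=5.0, quant_step=16, seed=0):
    """Hypothetical real-world corruption: Gaussian noise, then coarse quantization."""
    rng = random.Random(seed)
    out = []
    for row in img:
        new_row = []
        for v in row:
            v = v + rng.gauss(0.0, noise_sigma)       # sensor-like noise
            v = round(v / quant_step) * quant_step    # throw information away
            new_row.append(min(255, max(0, v)))       # keep values in 0..255
        out.append(new_row)
    return out


def make_training_pair(hr, factor=4, realistic=True):
    """Return (low-res input, high-res target) for one training example."""
    lr = downscale(hr, factor)
    if realistic:
        lr = degrade(lr)
    return lr, hr


hr = [[(x + y) * 16 for x in range(8)] for y in range(8)]
print(make_training_pair(hr, realistic=False)[0])  # clean "academic" input
print(make_training_pair(hr, realistic=True)[0])   # corrupted "real" input
```

A network trained only on the clean variant never sees the kind of corruption it meets in practice, while the corrupted variant forces it to learn both tasks from the list above.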

For the folks who like science:

The problem with compression can be interpreted as a harder "one-to-many" problem for a neural network, as it needs to consider many different variants of upscaled texture corresponding to the same low-resolution compressed one. Indeed, if you're doing a 4x upscale, you need to draw 4x4 = 16 new pixels for every single pixel of the low-resolution image. And if the low-resolution information has been destroyed by JPEG compression to the degree of blurry square blocks, the number of possible upscales that may correspond to such a block increases even further. Traditional neural networks are usually confused by the "one-to-many" problem: they tend to output an average of the possible outcomes, which appears as blur in the output image.
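A toy example shows why averaging happens. The sketch below assumes a network trained with a plain mean squared error loss and uses made-up 1D "images"; the helper names are illustrative. Two different sharp high-resolution rows downscale to the same low-resolution pixel, and the single prediction with the lowest expected error is their blurry average.

```python
def downscale_1d(signal, factor):
    """Average each group of `factor` samples into one low-res sample."""
    return [sum(signal[i:i + factor]) / factor
            for i in range(0, len(signal), factor)]


def mse(pred, target):
    """Mean squared error between two equally long rows."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def expected_mse(pred, candidates):
    """Average error of one prediction over all equally likely ground truths."""
    return sum(mse(pred, c) for c in candidates) / len(candidates)


def mean_prediction(candidates):
    """Per-pixel average of the candidates (the MSE-optimal single answer)."""
    return [sum(vals) / len(candidates) for vals in zip(*candidates)]


factor = 4
print(factor ** 2)  # pixels to draw per low-res pixel in a 2D 4x upscale

# Two sharp candidates: a thin bright line on the left or on the right.
hr_a = [255, 0, 0, 0]
hr_b = [0, 0, 0, 255]
candidates = [hr_a, hr_b]

# Both collapse to the same low-res pixel, so the input cannot tell them apart.
print(downscale_1d(hr_a, factor) == downscale_1d(hr_b, factor))

blurry = mean_prediction(candidates)
print(blurry)
print(expected_mse(blurry, candidates), expected_mse(hr_a, candidates))
```

The averaged row scores better on expected error than either sharp candidate, yet it matches neither of them: the bright line is smeared into two half-intensity pixels.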

What's under our hood?

We are a deep-learning-based photo processing product running in the cloud. In our upscaling process, we rely on a powerful new type of neural network called GANs (generative adversarial networks). They can create new, realistic-looking images out of thin air.

The same type of algorithm can be used to generate faces of people that never existed. Just take a look here.

Such a network “learns” what a good-quality image is and applies this knowledge to generate upscaled versions of the images you upload.

However, the way it works means that the original image and the downloaded one are not 100% the same. They can differ slightly, since a GAN uses frequently occurring patterns to generate missing features. It makes images not only larger in resolution, but also crisp, clean, and ready to print or post on your website.
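How a GAN "learns" what a good image looks like can be illustrated with the textbook adversarial objective: a second network, the discriminator, scores images as real or generated, and the generator is rewarded for fooling it. The sketch below shows only these standard loss formulas on made-up scores; it is an assumption-level illustration, not a description of our actual training setup, and the function names are hypothetical.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: low when real images score near 1 and fakes near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake):
    """Non-saturating generator loss: low when fakes are scored as real."""
    return -math.log(d_fake)


# Discriminator scores are probabilities in (0, 1) that an image is real.
print(generator_loss(0.1))  # generated image easily spotted: high loss
print(generator_loss(0.9))  # generated image looks real: low loss
print(discriminator_loss(d_real=0.9, d_fake=0.1))
```

Because the generator is judged on whether its output looks real rather than on per-pixel error, it is pushed toward sharp, plausible detail instead of a blurry average.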

In addition, we study real images from our customers to understand their specifics and make our system work with such cases. We often get insanely corrupted images from Instagram. This social network compresses images heavily and allows only 600 x 600 resolution - a quality too low for personal or business purposes.

The GAN solves the problems mentioned above and successfully recovers detail lost to compression from the surrounding context. This network chooses only the best for your photo. And that's absolutely fascinating.